Projects

AVERO Focus Project ETH Interview (78k views!)

Reinforcement Learning Controller for the Omnidirectional MAV AVERO

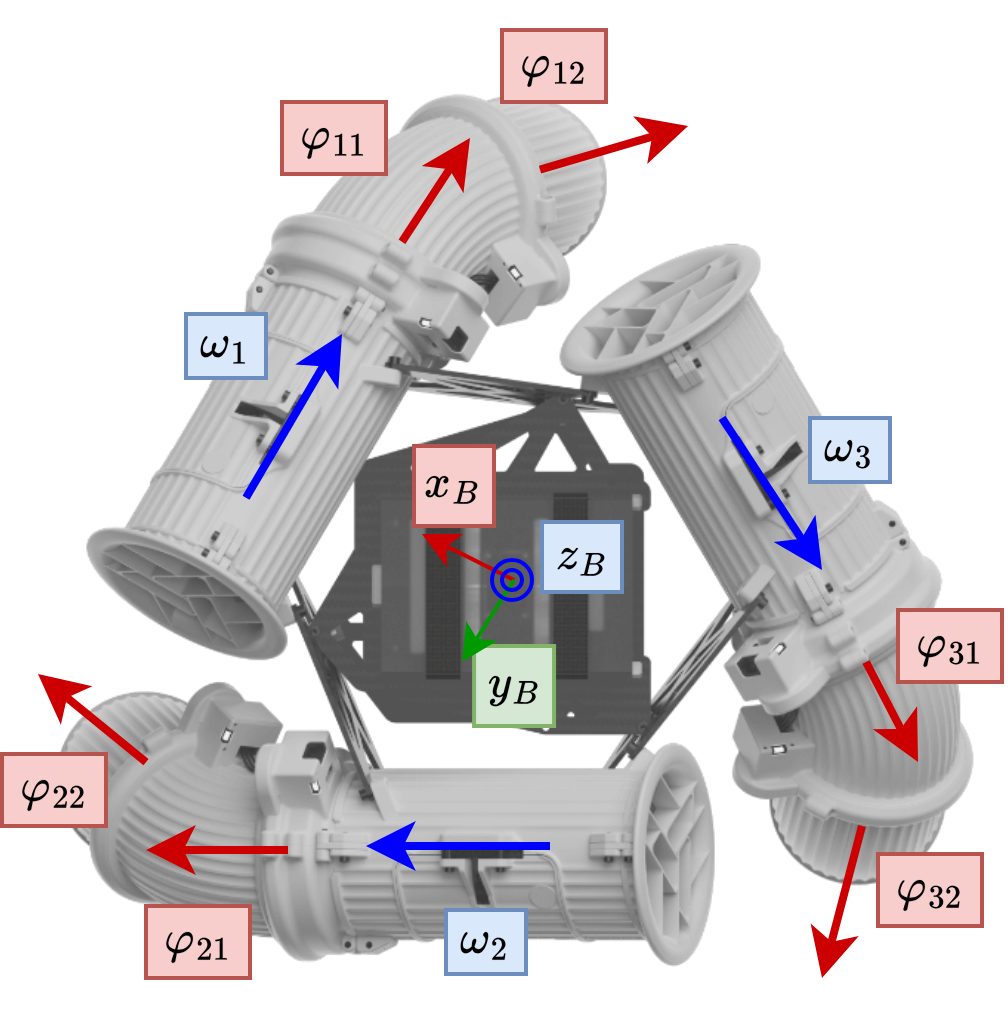

This Bachelor Thesis at the Autonomous Systems Lab, graded 6.0 / 6.0, extends the work of the focus project AVERO by developing an alternative controller using reinforcement learning. The Avero drone is an overactuated aerial robot: it has 9 actuators to control its 6 degrees of freedom. Its design hides the dangerous propellers inside ducts and uses swivel nozzles to redirect the thrust, enabling omnidirectional flight.

The Avero drone is actuated by three electric ducted fans $\omega_i$ and six swivel nozzle joints $\varphi_{ij}$.

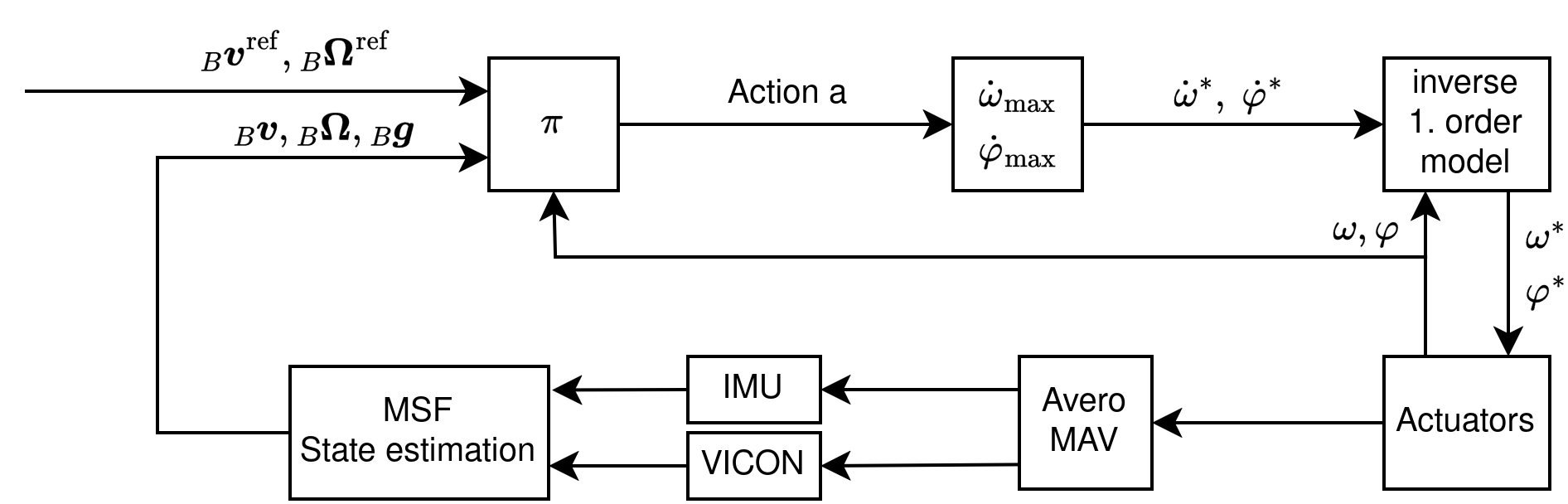

The math involved in stably hovering such an overactuated MAV is quite advanced. In my bachelor thesis, I explored an alternative: training a reinforcement learning policy that learns to fly by trial and error. I used real flight data to model and simulate the MAV and created a Gymnasium environment to train the RL policy.

Control Systems diagram with the RL policy $\pi$

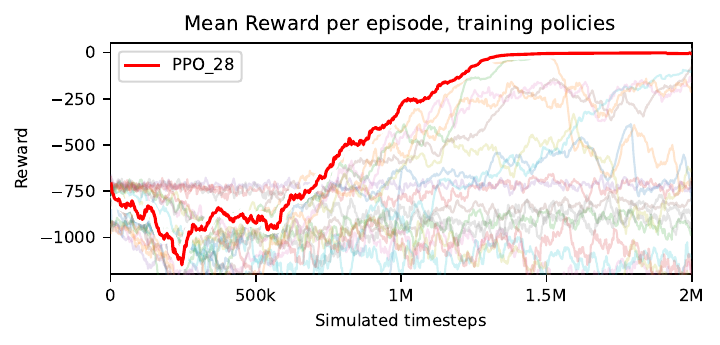

The simulation lets the RL agent gather a lot of flight experience. In about 30 minutes of real time, the agent flies over 6 hours in simulation and crashes about 100,000 times! It gets punished whenever it crashes and rewarded for hovering stably. This required a lot of fine-tuning, but after many failures, I managed to train a policy that hovers the MAV stably!
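To illustrate the idea, here is a minimal sketch of such a training environment. It is not the environment from the thesis: it reduces the MAV to a 1-D altitude toy with made-up dynamics, avoids the `gymnasium` dependency, and only mimics Gymnasium's `reset`/`step` return convention. All names (`ToyHoverEnv`, `rollout`, `crash_penalty`) and all numbers are assumptions chosen for illustration.

```python
import random


class ToyHoverEnv:
    """1-D altitude toy that mimics the Gymnasium reset/step convention.

    Hypothetical stand-in: the real environment models the full 9-actuator MAV.
    """

    def __init__(self, dt=0.01, max_steps=500, crash_penalty=-100.0, seed=None):
        self.dt = dt
        self.max_steps = max_steps
        self.crash_penalty = crash_penalty
        self.rng = random.Random(seed)
        self.z = 1.0
        self.vz = 0.0
        self.steps = 0

    def reset(self):
        # Start at 1 m altitude with a small random vertical velocity.
        self.z = 1.0
        self.vz = self.rng.uniform(-0.1, 0.1)
        self.steps = 0
        return (self.z, self.vz), {}

    def step(self, thrust_accel):
        # Net vertical acceleration: commanded thrust acceleration minus gravity.
        self.vz += (thrust_accel - 9.81) * self.dt
        self.z += self.vz * self.dt
        self.steps += 1

        # "Crash" (assumed definition): leaving the 0 m to 2 m altitude band.
        terminated = self.z <= 0.0 or self.z >= 2.0
        truncated = self.steps >= self.max_steps
        if terminated:
            reward = self.crash_penalty
        else:
            # Never positive: a perfect hover (zero velocity) earns exactly 0.
            reward = -abs(self.vz)
        return (self.z, self.vz), reward, terminated, truncated, {}


def rollout(env, policy):
    """Run one episode and return (total reward, whether it ended in a crash)."""
    obs, _ = env.reset()
    total, terminated, truncated = 0.0, False, False
    while not (terminated or truncated):
        obs, reward, terminated, truncated, _ = env.step(policy(obs))
        total += reward
    return total, terminated


# Zero thrust falls to the ground; a hand-tuned damping law keeps hovering.
print(rollout(ToyHoverEnv(seed=0), lambda obs: 0.0))
print(rollout(ToyHoverEnv(seed=0), lambda obs: 9.81 - 5.0 * obs[1]))
```

With a reward shaped like this, the best achievable return is zero, so a training curve that climbs towards zero indicates a policy that neither crashes nor drifts.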

Training runs of the RL policies. PPO_28 is highlighted because its (negative) reward reaches zero.

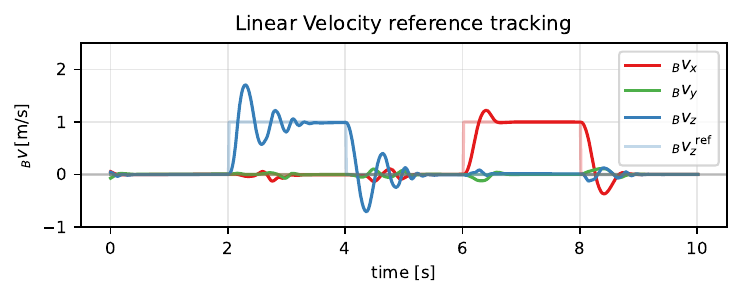

I then evaluated the RL controller in simulation on parameters it wasn’t trained on. The plot below shows the linear velocity tracking: the MAV first hovers stably at zero velocity, then tracks $1\,\frac{\mathrm{m}}{\mathrm{s}}$ references in the z- and x-directions.

Linear velocity tracking in simulation
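One simple way to put a number on tracking quality is the root-mean-square error between reference and measured velocity. The sketch below is only an illustration of that metric, not the evaluation used in the thesis: the velocity trace is synthetic (a first-order lag standing in for the controlled MAV), and `step_reference`, `first_order_response`, and `alpha` are hypothetical names and values.

```python
import math


def step_reference(n_steps, step_at, amplitude=1.0):
    """Reference that is 0 until index step_at, then jumps to amplitude (m/s)."""
    return [0.0 if k < step_at else amplitude for k in range(n_steps)]


def first_order_response(reference, alpha=0.1):
    """Synthetic stand-in for the closed loop: a first-order lag toward the reference."""
    v, out = 0.0, []
    for r in reference:
        v += alpha * (r - v)
        out.append(v)
    return out


def rmse(reference, measured):
    """Root-mean-square tracking error over one trajectory."""
    if len(reference) != len(measured):
        raise ValueError("reference and measured must have the same length")
    squared = sum((r - m) ** 2 for r, m in zip(reference, measured))
    return math.sqrt(squared / len(reference))


ref = step_reference(n_steps=200, step_at=50)  # hover, then a 1 m/s step
vel = first_order_response(ref)                # synthetic, not flight data
print(f"tracking RMSE: {rmse(ref, vel):.3f} m/s")
```

Computing the metric per axis makes it easy to compare controllers, or the same controller under different simulation parameters.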

Hands-on Deep Learning

This semester course at ETH Zürich introduced deep learning through the PyTorch framework in a series of hands-on exercises, exploring topics in computer vision, natural language processing, audio processing, graph neural networks, and reinforcement learning.

Hands-on Self-Driving Cars with Duckietown (6.0/6.0 project)

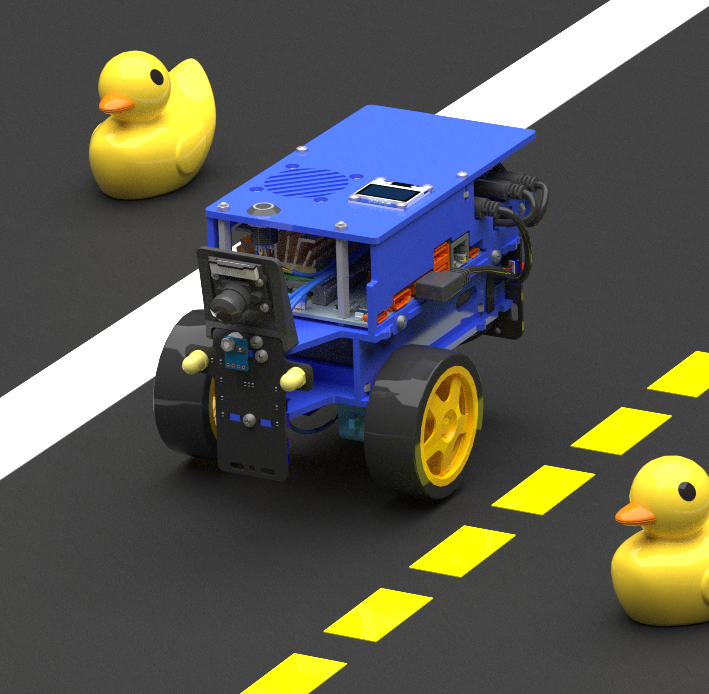

Duckietown is an educational robotics platform designed to teach autonomy using miniature self-driving vehicles. It simulates real-world challenges like lane following, obstacle avoidance, and vehicle coordination in a scaled-down city environment. Built with affordable hardware and open-source software, Duckietown is used in top universities (including ETH Zürich and MIT) to explore perception, control, and reinforcement learning in robotics.

Duckiebot

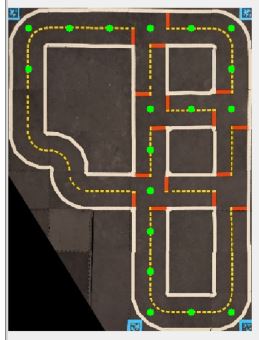

Our project had the goal of localizing the Duckiebot in a top-down picture of the real map. The real-world analogy would be localizing a car in a city using its onboard sensors and a satellite picture of the city. We used onboard sensors (wheel encoders and IMU) to estimate the odometry, and a search algorithm then determined the Duckiebot’s position on the map.
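As a rough illustration of the odometry part, the sketch below shows standard differential-drive dead reckoning from wheel encoder ticks. It is not our project code: it ignores the IMU entirely, and the wheel radius, wheel baseline, and ticks per revolution are placeholder values that would have to be calibrated for a real Duckiebot. `Pose` and `update_pose` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # radians, counter-clockwise positive


def update_pose(pose, d_ticks_left, d_ticks_right,
                wheel_radius=0.0318, baseline=0.1, ticks_per_rev=135):
    """Integrate one encoder reading (tick deltas since the last update).

    The default geometry values are assumptions, not calibrated parameters.
    """
    meters_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = d_ticks_left * meters_per_tick
    d_right = d_ticks_right * meters_per_tick

    d_center = (d_left + d_right) / 2.0    # distance travelled by the midpoint
    d_theta = (d_right - d_left) / baseline  # change in heading

    # Midpoint approximation: move along the average heading of this step.
    heading = pose.theta + d_theta / 2.0
    return Pose(
        x=pose.x + d_center * math.cos(heading),
        y=pose.y + d_center * math.sin(heading),
        theta=pose.theta + d_theta,
    )


pose = Pose()
for left, right in [(50, 50), (20, 40), (50, 50)]:  # made-up tick deltas
    pose = update_pose(pose, left, right)
print(pose)
```

Pure encoder odometry drifts over time because wheel slip and calibration errors accumulate, which is one reason to combine it with other sensors and with a map-based position search.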

Odometry of the Duckiebot

Corresponding Location based on the Odometry